This section describes the Storage Autoscaling solution.

In Kubernetes (K8s), a PersistentVolume (PV) is a piece of storage in the cluster that is either provisioned statically by an administrator or provisioned dynamically through a StorageClass. It exists independently of any Pod's lifecycle and can be attached to and detached from Pods as needed.

In dynamic and evolving environments, the storage requirements of applications can vary over time. Storage Autoscaling ensures that your workloads have the necessary storage resources when demand increases, and conversely, reclaims resources when demand decreases. This capability aligns with the broader K8s philosophy of automating infrastructure management.

K8s StorageClasses define the properties and behaviors of PVs. Storage Autoscaling is implemented using a new storage class that uses the standard AWS storage classes to autoscale PVs.

Storage Autoscaling brings agility and efficiency to storage management in dynamic, containerized environments.

Supported configurations

StatefulSet workloads are fully supported.

Other workloads can be used with beta support.

Limit of up to 5 Pods per node (due to a limit of 27 volumes per node).

Volume snapshots are not currently supported.
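The Pod limit above follows from the per-node volume limit. As a rough illustration only, the sketch below divides the volume limit by the number of volumes each Pod might use. The figure of 5 volumes per Pod is an assumption chosen to match the stated limits; the source does not give it.

```python
def max_pods_per_node(volume_limit: int, volumes_per_pod: int) -> int:
    """Return how many Pods fit on a node given its volume limit."""
    if volumes_per_pod <= 0:
        raise ValueError("volumes_per_pod must be positive")
    return volume_limit // volumes_per_pod


# 27 is the per-node volume limit quoted above.
# 5 volumes per Pod is an assumed figure, not taken from the source.
print(max_pods_per_node(27, 5))  # 5
```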

System architecture

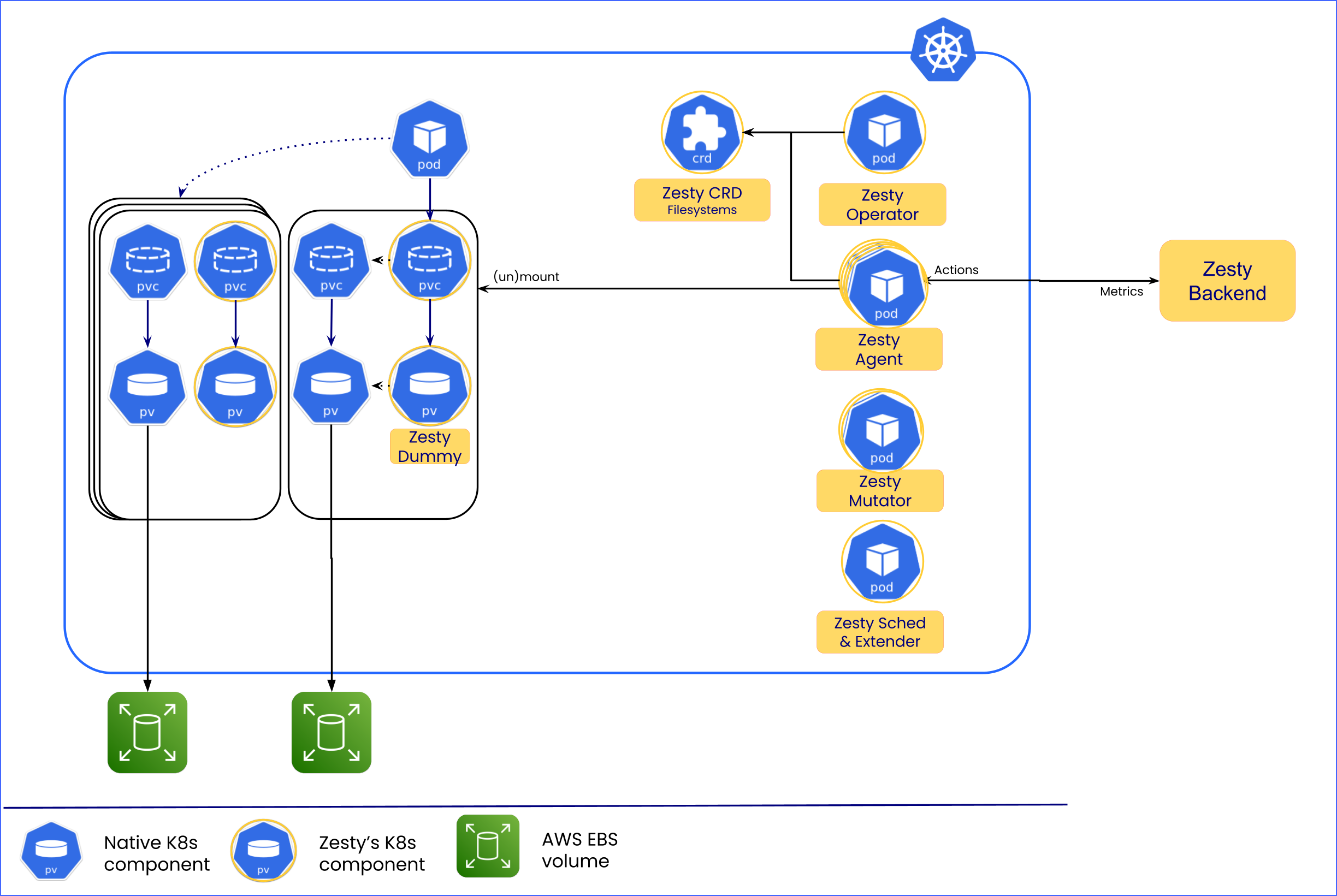

The following diagram shows the Storage Autoscaling architecture:

The installation deploys the following components:

Zesty Storage Operator: Provisions all the required K8s resources: PVCs, PVs, and volume attachments (VAs).

FileSystem CustomResourceDefinition (CRD): A Zesty custom resource that retains the state of the filesystem.

Zesty Storage Agent: Runs as a DaemonSet, so one instance exists on each node in the cluster. The Agent performs all the filesystem operations (mount, umount, add, extend, and shrink) and exposes filesystem metrics to Prometheus.

Zesty Storage Mutator: A mutating webhook that changes the scheduler for every workload in the cluster. When a new workload is deployed, the mutator receives an event that signals it to change the scheduler from the default to the Zesty Scheduler & Extender.

Zesty Scheduler & Extender (“S&E”): This is identical to the default EKS scheduler (the version from the EKS repo that matches the cluster version), with the addition of the Zesty Extender.

The default EKS scheduler assumes that each PVC is backed by exactly one EBS volume. In the Storage Autoscaling solution, a single request is built on multiple PVCs, so it can be backed by multiple volumes.

When a node fails and its Pods need to be reassigned, the default scheduler may not find a suitable node, because it looks for a node whose number of free volume slots is at least the number of volume mounts the Pod requests, rather than the number of EBS volumes that are actually attached.

The Zesty Extender is aware of the actual number of EBS volumes and can make the reassignments correctly.
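The difference between the two views can be sketched as a node filter. This is an illustrative model only, not the Extender's implementation: the `Node` type, the node names, and the volume counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    volume_limit: int      # maximum attachable volumes on the node
    attached_volumes: int  # volumes already attached


def feasible_nodes(nodes, required_volumes):
    """Return the names of nodes with enough free slots."""
    return [
        n.name
        for n in nodes
        if n.volume_limit - n.attached_volumes >= required_volumes
    ]


nodes = [Node("node-a", 27, 25), Node("node-b", 27, 10)]

# Hypothetical Pod: one requested PVC that is backed by four EBS volumes.
pvc_count, actual_ebs_volumes = 1, 4

# Counting one volume per PVC accepts node-a, which has only two free slots.
print(feasible_nodes(nodes, pvc_count))           # ['node-a', 'node-b']
# Counting the real EBS volumes rules node-a out.
print(feasible_nodes(nodes, actual_ebs_volumes))  # ['node-b']
```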

Nodes, Pods, PVs, and PVCs are standard K8s elements. In addition, for every PVC with Zesty’s storage class that is requested, Zesty adds an abstraction layer PVC and a filesystem custom resource for managing the multiple standard PVCs related to this request.

The annotations, node affinity rules, reclaim policy, and so on are defined in the original PVC. This information is propagated to the dummy PV (see System workflows) and to the filesystem as needed. This is all standard for K8s.

System workflows

When a PVC is created with Zesty’s storage class, a PV of that storage class is provisioned and managed by the Zesty Storage Operator. This PV is referred to as a dummy PV because it doesn’t hold data and is used only to manage the autoscaling.

After creating the dummy PV, the Zesty Storage Operator creates PVCs with a standard storage class (for example, ebs-sc), which are regular PVCs managed by the AWS CSI. The AWS standard storage class volumes are created and mounted onto the Pods using the Zesty Storage Agent.

When a PVC with Zesty’s storage class (zesty-sc) is created, the following resources are also created:

Filesystem: a custom resource (CR) of Filesystem type to represent the filesystem and the volumes that may be created to satisfy this request.

Dummy PV: a resource to satisfy the PVC that K8s created for this request.

The dummy PV is the only resource that the K8s scheduler is aware of with regard to Pod scheduling.

Standard storage class PV: satisfies the original requests by creating a PV using the standard CSI controller.
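As a minimal sketch of the list above, the following builds a PVC manifest that requests `zesty-sc` and enumerates the resources that would accompany it. The storage class names come from the text. The claim name, size, access mode, the `-fs`, `-dummy`, and `-vol-N` naming scheme, and the number of backing volumes are hypothetical.

```python
import json

ZESTY_SC = "zesty-sc"   # storage class name used in the text
STANDARD_SC = "ebs-sc"  # example standard storage class from the text


def pvc_manifest(name, storage_class, size):
    """Build a PersistentVolumeClaim manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def resources_for(pvc, backing_volumes=1):
    """List (kind, name) pairs created alongside a PVC that uses ZESTY_SC."""
    if pvc["spec"]["storageClassName"] != ZESTY_SC:
        return []  # other storage classes are left to their own provisioner
    name = pvc["metadata"]["name"]
    created = [
        ("Filesystem", f"{name}-fs"),                   # hypothetical name
        ("PersistentVolume (dummy)", f"{name}-dummy"),  # hypothetical name
    ]
    created += [
        (f"PersistentVolumeClaim ({STANDARD_SC})", f"{name}-vol-{i}")
        for i in range(backing_volumes)
    ]
    return created


claim = pvc_manifest("data-db-0", ZESTY_SC, "100Gi")
print(json.dumps(claim, indent=2))
for kind, name in resources_for(claim, backing_volumes=2):
    print(kind, name)
```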

Once the standard PV is created and attached to the node, the Zesty Storage Agent on the node is responsible for:

Formatting the PV with the Zesty filesystem

Mounting the volume to the appropriate filesystem

The Zesty Storage Agent learns about the filesystem and the PVs by watching the Filesystem CRs relevant to the Pods running on that node.

Pod initialization

When a Pod is associated with the Zesty storage class, the Zesty mutator changes the Pod's scheduler to the Zesty Storage Scheduler & Extender (“S&E”). This ensures that during future Pod migrations, the S&E accurately accounts for the actual number of volumes attached to each node.
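The scheduler change can be pictured as a mutating admission webhook response that carries a JSON Patch for `spec.schedulerName`. This is a sketch under stated assumptions, not the Zesty mutator's code: the scheduler name `zesty-scheduler`, the helper names, and the way Zesty claims are identified (a set of claim names passed in) are all hypothetical. The response shape follows the standard K8s `AdmissionReview` format.

```python
import base64
import json

ZESTY_SCHEDULER = "zesty-scheduler"  # hypothetical scheduler name


def uses_zesty_storage(pod, zesty_claims):
    """True if any Pod volume references a claim known to use Zesty storage."""
    for vol in pod.get("spec", {}).get("volumes", []):
        claim = vol.get("persistentVolumeClaim")
        if claim and claim.get("claimName") in zesty_claims:
            return True
    return False


def admission_response(uid, pod, zesty_claims):
    """Build an AdmissionReview response, patching the scheduler if needed."""
    response = {"uid": uid, "allowed": True}
    if uses_zesty_storage(pod, zesty_claims):
        # "add" on an existing object member replaces its value (RFC 6902).
        patch = [{"op": "add", "path": "/spec/schedulerName",
                  "value": ZESTY_SCHEDULER}]
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(
            json.dumps(patch).encode()).decode()
    return {"apiVersion": "admission.k8s.io/v1",
            "kind": "AdmissionReview",
            "response": response}


pod = {"spec": {"volumes": [
    {"name": "data", "persistentVolumeClaim": {"claimName": "data-db-0"}}]}}
review = admission_response("example-uid", pod, {"data-db-0"})
print(json.loads(base64.b64decode(review["response"]["patch"])))
```

Pods that reference no Zesty claim are allowed through unchanged in this sketch, so they keep whatever scheduler they already had.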

Pod migration

When a Pod migrates between nodes, the Zesty Storage Operator detaches the volumes from the node that the Pod is leaving. The Pod is then scheduled to a new node by the K8s scheduler, while the S&E helps the K8s scheduler determine which node the volumes should move to. Once the Pod is scheduled to the new node, the Zesty Storage Operator attaches the volumes to that node (by using the CSI), and the Zesty Storage Agent mounts the volumes for the corresponding Pod based on the configuration in the Filesystem CR. The status of the Pod changes to Running only after all the PVs are mounted.
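The ordering described above can be written out as a simple sequence. The sketch below only models the order of operations. The function name, step wording, and the Pod, volume, and node names are hypothetical, and no real K8s API is called.

```python
def migration_steps(pod, volumes, old_node, new_node):
    """Return the ordered steps for moving a Pod and its volumes."""
    steps = [f"operator: detach {v} from {old_node}" for v in volumes]
    steps.append(f"scheduler+extender: schedule {pod} to {new_node}")
    steps += [f"operator: attach {v} to {new_node} via CSI" for v in volumes]
    steps += [f"agent: mount {v} for {pod}" for v in volumes]
    # The Pod is reported as Running only after every volume is mounted.
    steps.append(f"{pod}: Running")
    return steps


for step in migration_steps("db-0", ["vol-0", "vol-1"], "node-a", "node-b"):
    print(step)
```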